IRON: Inverse Rendering by Optimizing Neural SDFs and Materials from Photometric Images

CVPR 2022 (Oral)

Abstract

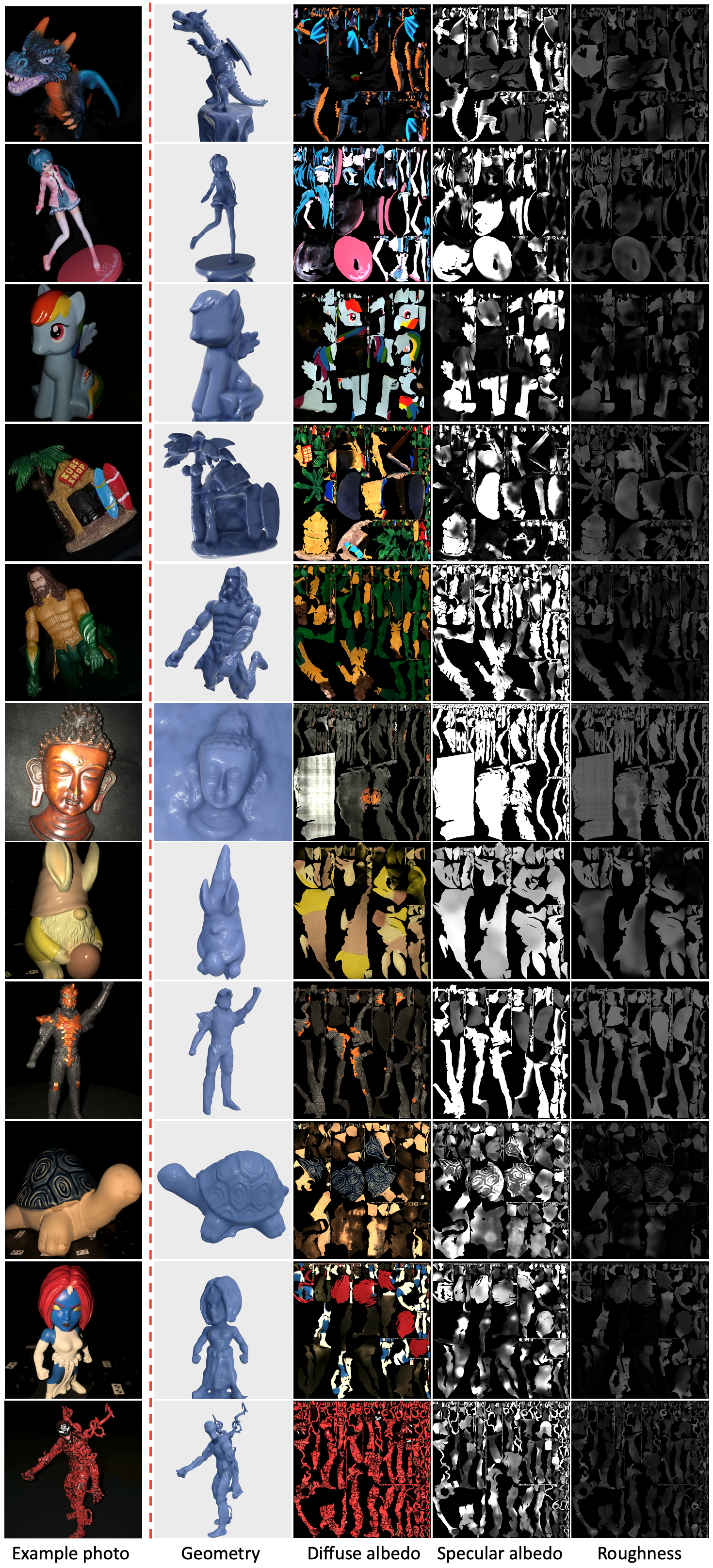

We propose a neural inverse rendering pipeline called IRON that operates on photometric images and outputs high-quality 3D content in the format of triangle meshes and material textures readily deployable in existing graphics pipelines. Our method adopts neural representations for geometry as signed distance fields (SDFs) and materials during optimization to enjoy their flexibility and compactness, and features a hybrid optimization scheme for neural SDFs: first, optimize using a volumetric radiance field approach to recover correct topology, then optimize further using edge-aware physics-based surface rendering for geometry refinement and disentanglement of materials and lighting. In the second stage, we also draw inspiration from mesh-based differentiable rendering, and design a novel edge sampling algorithm for neural SDFs to further improve performance. We show that IRON achieves significantly better inverse rendering quality compared to prior works.
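To make the SDF representation concrete, the sketch below shows sphere tracing, one common way to find where a camera ray meets the zero level set of a signed distance field, together with a finite-difference surface normal. It is an illustration only and is not taken from the IRON code: an analytic unit sphere stands in for the neural SDF, and the names `sphere_sdf`, `sphere_trace`, and `sdf_normal` as well as all step counts and tolerances are hypothetical choices.

```python
import numpy as np

def sphere_sdf(p, radius=1.0):
    """Signed distance from point p to a sphere at the origin.
    Stand-in (assumption) for a learned neural SDF."""
    return np.linalg.norm(p, axis=-1) - radius

def sphere_trace(origin, direction, sdf, max_steps=64, eps=1e-5, far=10.0):
    """March along a ray, advancing by the SDF value at each step.
    Returns the hit distance t, or None if the ray misses."""
    direction = direction / np.linalg.norm(direction)
    t = 0.0
    for _ in range(max_steps):
        d = sdf(origin + t * direction)
        if d < eps:          # close enough to the zero level set
            return t
        t += d               # safe step: no surface is nearer than d
        if t > far:
            return None
    return None

def sdf_normal(p, sdf, h=1e-4):
    """Unit surface normal from a central-difference SDF gradient."""
    grad = np.array([(sdf(p + h * e) - sdf(p - h * e)) / (2 * h)
                     for e in np.eye(3)])
    return grad / np.linalg.norm(grad)

# Usage: a ray fired along +z from z = -3 toward the unit sphere.
origin = np.array([0.0, 0.0, -3.0])
direction = np.array([0.0, 0.0, 1.0])
t_hit = sphere_trace(origin, direction, sphere_sdf)
normal = sdf_normal(origin + t_hit * direction, sphere_sdf)
```

With a neural SDF, `sphere_sdf` would be replaced by a network evaluation and the normal would typically come from automatic differentiation rather than finite differences.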

Example 3D assets created by IRON from multi-view photometric images of real objects.

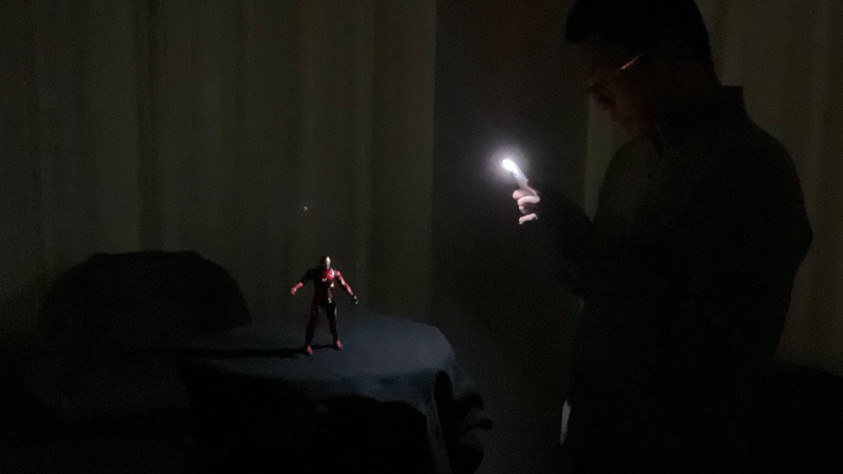

In practice, photometric images can be captured by a hand-held smartphone camera with the LED flashlight turned on in a dark environment. Auto-exposure, auto-white-balance, and auto-focus should all be turned off. Usually, ~200 images suffice for high-quality results.
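The capture setup suggests a simple image formation model, sketched below under stated assumptions: the flashlight is treated as a point light located at the camera center (so the light direction equals the view direction), ambient light is zero, and the surface is purely Lambertian. The Lambertian term is only a stand-in for a full material model, and the function name `colocated_lambertian` and its parameters are hypothetical.

```python
import numpy as np

def colocated_lambertian(point, normal, camera_pos, albedo, light_intensity=1.0):
    """Radiance seen by a camera whose flash sits at the camera center.
    Assumes a point light, no ambient light, and a Lambertian surface."""
    to_cam = camera_pos - point
    dist = np.linalg.norm(to_cam)
    w = to_cam / dist                              # view dir == light dir
    cos_theta = max(float(np.dot(normal, w)), 0.0)
    # Lambertian BRDF (albedo / pi) times inverse-square light falloff.
    return albedo / np.pi * light_intensity * cos_theta / dist ** 2

# Usage: a gray patch facing the camera from 2 units away, then 4 units.
point = np.zeros(3)
normal = np.array([0.0, 0.0, 1.0])
albedo = np.array([0.5, 0.5, 0.5])
near = colocated_lambertian(point, normal, np.array([0.0, 0.0, 2.0]), albedo)
far = colocated_lambertian(point, normal, np.array([0.0, 0.0, 4.0]), albedo)
```

Under this model, pixel values depend on a single fixed intensity scale, which is one plausible reason to lock exposure and white balance during capture: per-image automatic adjustments would change that scale from frame to frame.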

BibTeX

@inproceedings{iron-2022,

title={IRON: Inverse Rendering by Optimizing Neural SDFs and Materials from Photometric Images},

author={Zhang, Kai and Luan, Fujun and Li, Zhengqi and Snavely, Noah},

booktitle={IEEE Conf. Comput. Vis. Pattern Recog.},

year={2022}

}

Acknowledgements

This work was supported in part by the National Science Foundation (IIS-2008313), and by funding from Intel and Amazon Web Services. We also thank Sai Bi for providing their code and data.