Leveraging Vision Reconstruction Pipelines for Satellite Imagery

Kai Zhang, Jin Sun, Noah Snavely

Cornell Tech, Cornell University

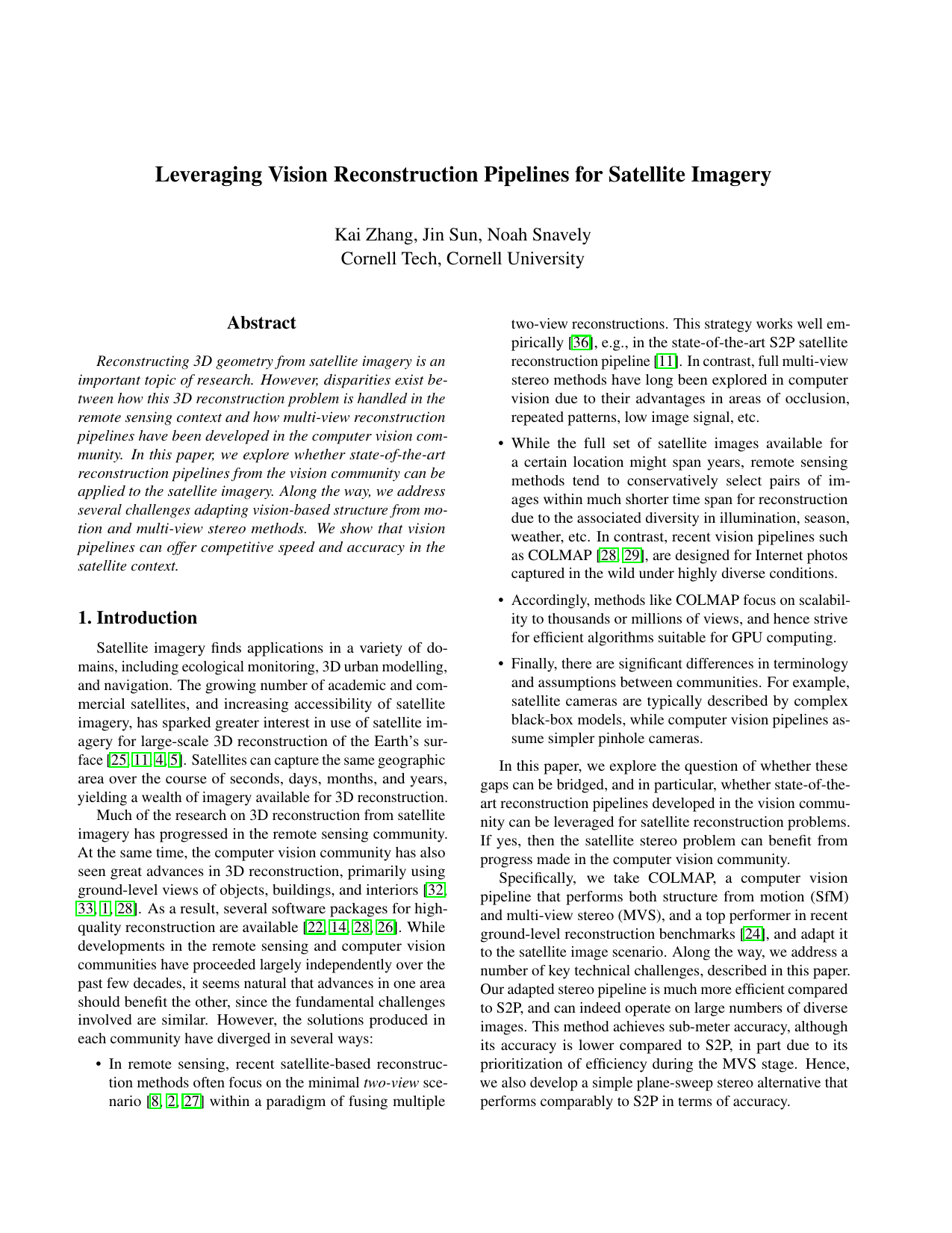

Reconstructing 3D geometry from satellite imagery is an important topic of research. However, the remote sensing community handles this 3D reconstruction problem differently from the way multi-view reconstruction pipelines have been developed in the computer vision community. In this project, we explore whether state-of-the-art reconstruction pipelines from the vision community can be applied to satellite imagery. Along the way, we address several challenges in adapting vision-based structure from motion and multi-view stereo methods. We show that vision pipelines can offer competitive speed and accuracy in the satellite context.

Paper

@inproceedings{VisSat-2019,

title={{Leveraging Vision Reconstruction Pipelines for Satellite Imagery}},

author={Zhang, Kai and Sun, Jin and Snavely, Noah},

booktitle={ICCV Workshop on 3D Reconstruction in the Wild (3DRW)},

year={2019}

}

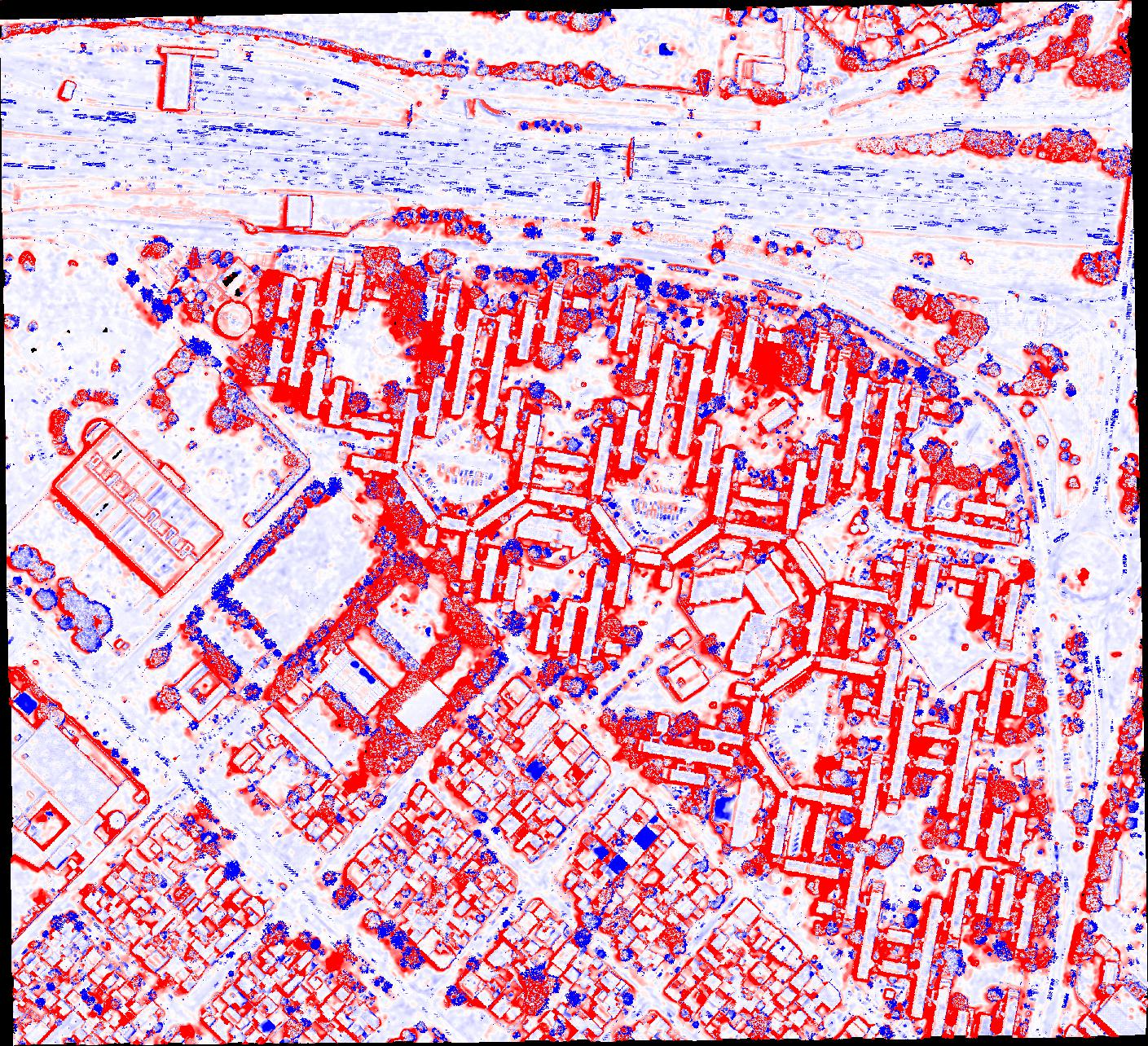

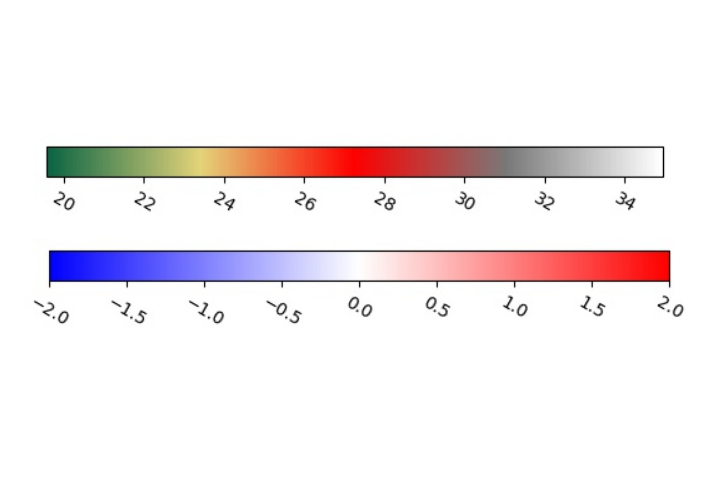

Example Result

Input Images

View all 47 input images [Google Drive link]. The images have a resolution of around 30 cm per pixel.

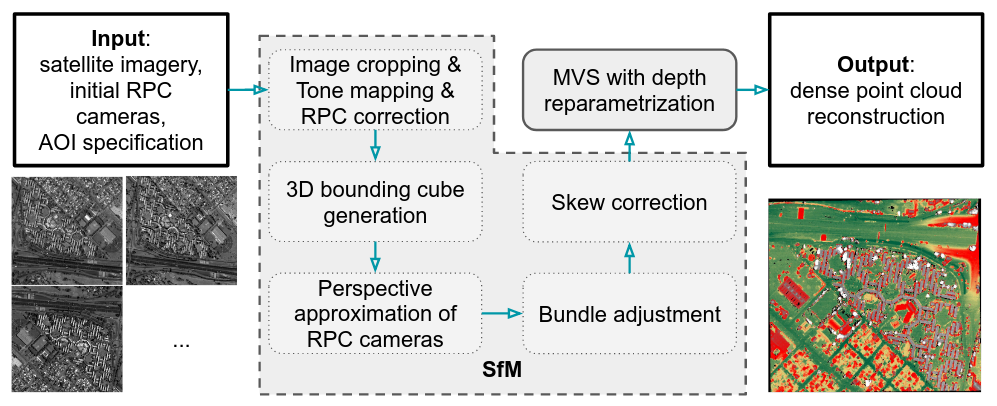

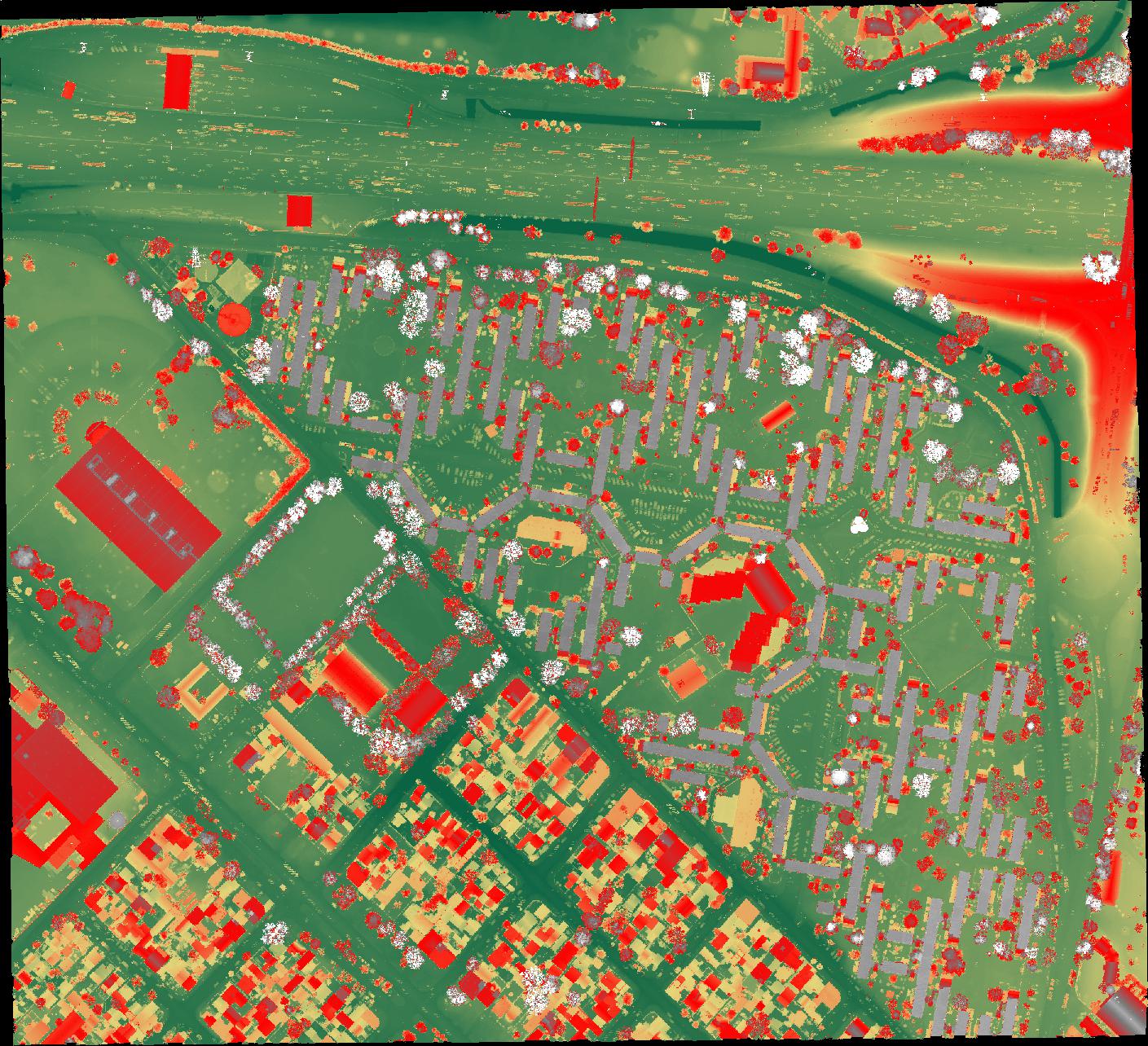

Reconstructed Point Cloud

Metric Numbers

The median height error is 0.315 meters and the completeness score is 72.5%. (The completeness score is defined as the percentage of non-empty ground-truth height map cells where a reconstructed height value exists and is within 1 meter of the ground-truth value.)
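As a rough illustration of how these two numbers can be computed from a pair of height maps, here is a minimal sketch. It is not the evaluation code shipped in VisSatToolSet. The function name `height_map_metrics`, the use of NaN to mark empty cells, the inclusive 1-meter threshold, and taking the median over cells where both maps have a value are all assumptions made for this example.

```python
import math
from statistics import median


def height_map_metrics(gt_heights, pred_heights, threshold_m=1.0):
    """Return (median_abs_error_m, completeness_percent).

    Both inputs are flat sequences of heights in meters, one value per
    height-map cell, with NaN marking an empty cell (an assumption of
    this sketch).
    """
    if len(gt_heights) != len(pred_heights):
        raise ValueError("height maps must have the same number of cells")

    valid_gt_cells = 0   # non-empty ground-truth cells
    complete_cells = 0   # cells with a prediction within the threshold
    abs_errors = []      # errors over cells where both maps have a value

    for gt, pred in zip(gt_heights, pred_heights):
        if math.isnan(gt):
            continue  # empty ground-truth cells are ignored entirely
        valid_gt_cells += 1
        if math.isnan(pred):
            continue  # a missing reconstruction counts against completeness
        error = abs(pred - gt)
        abs_errors.append(error)
        if error <= threshold_m:
            complete_cells += 1

    completeness = (
        100.0 * complete_cells / valid_gt_cells if valid_gt_cells else math.nan
    )
    median_error = median(abs_errors) if abs_errors else math.nan
    return median_error, completeness
```

For example, calling `height_map_metrics(gt, pred)` on two flattened height maps of equal size returns the median absolute height error in meters and the completeness as a percentage.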

Our work makes it possible to apply state-of-the-art 3D reconstruction methods from the computer vision community to satellite images. We show that our method is competitive in accuracy with a state-of-the-art pipeline specific to satellite imagery, while also demonstrating scalability and efficiency. In the long run, our goal is to bridge the gap between 3D reconstruction methods in the computer vision and remote sensing communities.

Code

VisSatSatelliteStereo: view the README on GitHub.

ColmapForVisSat on GitHub: backbone for VisSatSatelliteStereo.

VisSatToolSet on GitHub: see the Data section below for details about this repo.

rpc_triangulation_solver: view the README on GitHub.

SatellitePlaneSweep: view the README on GitHub.

GraphCutOnCostVolume: to be released.

Data

Our method (the SfM part of [VisSatSatelliteStereo]) converts the satellite imagery data to a more conventional and accessible format:

- HDR images are tonemapped to LDR.

- RPC cameras are approximated with perspective cameras, which are then bundle-adjusted by our pipeline.
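To give a feel for the first conversion step, the sketch below maps high-dynamic-range pixel values to 8-bit values by clipping to a percentile range and applying a gamma curve. This is a generic tonemapping recipe chosen for illustration; it is not necessarily the operator used in VisSatSatelliteStereo, and the function name `tonemap_to_8bit`, the percentile bounds, and the gamma value are assumptions.

```python
def tonemap_to_8bit(pixels, low_pct=1.0, high_pct=99.0, gamma=2.2):
    """Map a flat sequence of HDR pixel values to integers in [0, 255].

    Values are clipped to the [low_pct, high_pct] percentile range,
    rescaled to [0, 1], and passed through a 1/gamma power curve.
    """
    if not pixels:
        return []

    ordered = sorted(pixels)

    def percentile(pct):
        # Nearest-rank style percentile; adequate for a sketch.
        index = round(pct / 100.0 * (len(ordered) - 1))
        return ordered[index]

    lo, hi = percentile(low_pct), percentile(high_pct)
    if hi <= lo:
        # Constant (or nearly constant) image: nothing to stretch.
        return [0 for _ in pixels]

    ldr = []
    for value in pixels:
        scaled = min(max((value - lo) / (hi - lo), 0.0), 1.0)
        ldr.append(round(255 * scaled ** (1.0 / gamma)))
    return ldr
```

Applied to a hypothetical row of 11-bit values such as `[0, 100, 200, 300, 2047]`, the function returns a list of integers between 0 and 255 that preserves the ordering of the inputs while brightening the darker values.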

You can download our data from Google Drive. If you perform multi-view stereo on these images and cameras, the reconstructed point cloud will be in a local ENU coordinate system. We provide an accompanying toolset [VisSatToolSet] to convert the points' coordinates to the global coordinate system, i.e., (UTM east, UTM north, altitude), and to report the point cloud's accuracy by comparing it to the ground truth.
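For readers unfamiliar with local ENU frames, the sketch below shows the standard chain from a local east-north-up offset back to geodetic coordinates on the WGS84 ellipsoid (ENU to ECEF, then ECEF to latitude, longitude, and ellipsoidal height). It is not the code in VisSatToolSet, and the function names and the origin used in the usage note are hypothetical. The final projection from latitude and longitude to UTM easting and northing is left to a map projection library and is not shown.

```python
import math

# WGS84 ellipsoid constants.
WGS84_A = 6378137.0                      # semi-major axis in meters
WGS84_F = 1.0 / 298.257223563            # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)     # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, alt_m):
    """Convert latitude/longitude (degrees) and ellipsoidal height to ECEF."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + alt_m) * math.cos(lat) * math.cos(lon)
    y = (n + alt_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + alt_m) * math.sin(lat)
    return x, y, z


def ecef_to_geodetic(x, y, z, iterations=10):
    """Iteratively invert geodetic_to_ecef (not valid at the poles)."""
    lon = math.atan2(y, x)
    p = math.hypot(x, y)
    lat = math.atan2(z, p * (1.0 - WGS84_E2))
    for _ in range(iterations):
        n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
        alt = p / math.cos(lat) - n
        lat = math.atan2(z, p * (1.0 - WGS84_E2 * n / (n + alt)))
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    alt = p / math.cos(lat) - n
    return math.degrees(lat), math.degrees(lon), alt


def enu_to_geodetic(east, north, up, origin_lat, origin_lon, origin_alt):
    """Convert a local ENU point (meters) to (lat_deg, lon_deg, alt_m)."""
    lat0, lon0 = math.radians(origin_lat), math.radians(origin_lon)
    x0, y0, z0 = geodetic_to_ecef(origin_lat, origin_lon, origin_alt)
    # Rotate the ENU offset into the ECEF frame.
    dx = (-math.sin(lon0) * east
          - math.sin(lat0) * math.cos(lon0) * north
          + math.cos(lat0) * math.cos(lon0) * up)
    dy = (math.cos(lon0) * east
          - math.sin(lat0) * math.sin(lon0) * north
          + math.cos(lat0) * math.sin(lon0) * up)
    dz = math.cos(lat0) * north + math.sin(lat0) * up
    return ecef_to_geodetic(x0 + dx, y0 + dy, z0 + dz)
```

With a hypothetical origin such as `(30.33, -81.66, 5.0)`, calling `enu_to_geodetic(0.0, 0.0, 25.0, 30.33, -81.66, 5.0)` leaves latitude and longitude unchanged and raises the height by 25 meters, which is a quick sanity check that the rotation and the inverse conversion agree.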

Our data is based upon the public IARPA Multi-View Stereo 3D Mapping Challenge remote sensing dataset. If you use our transformed data in your work, please include the following citations:

- @inproceedings{VisSat-2019,

title={Leveraging Vision Reconstruction Pipelines for Satellite Imagery},

author={Zhang, Kai and Sun, Jin and Snavely, Noah},

booktitle={ICCV Workshop on 3D Reconstruction in the Wild (3DRW)},

year={2019}

}

- @inproceedings{bosch2016multiple,

title={A multiple view stereo benchmark for satellite imagery},

author={Bosch, Marc and Kurtz, Zachary and Hagstrom, Shea and Brown, Myron},

booktitle={IEEE Applied Imagery Pattern Recognition Workshops},

year={2016}

}

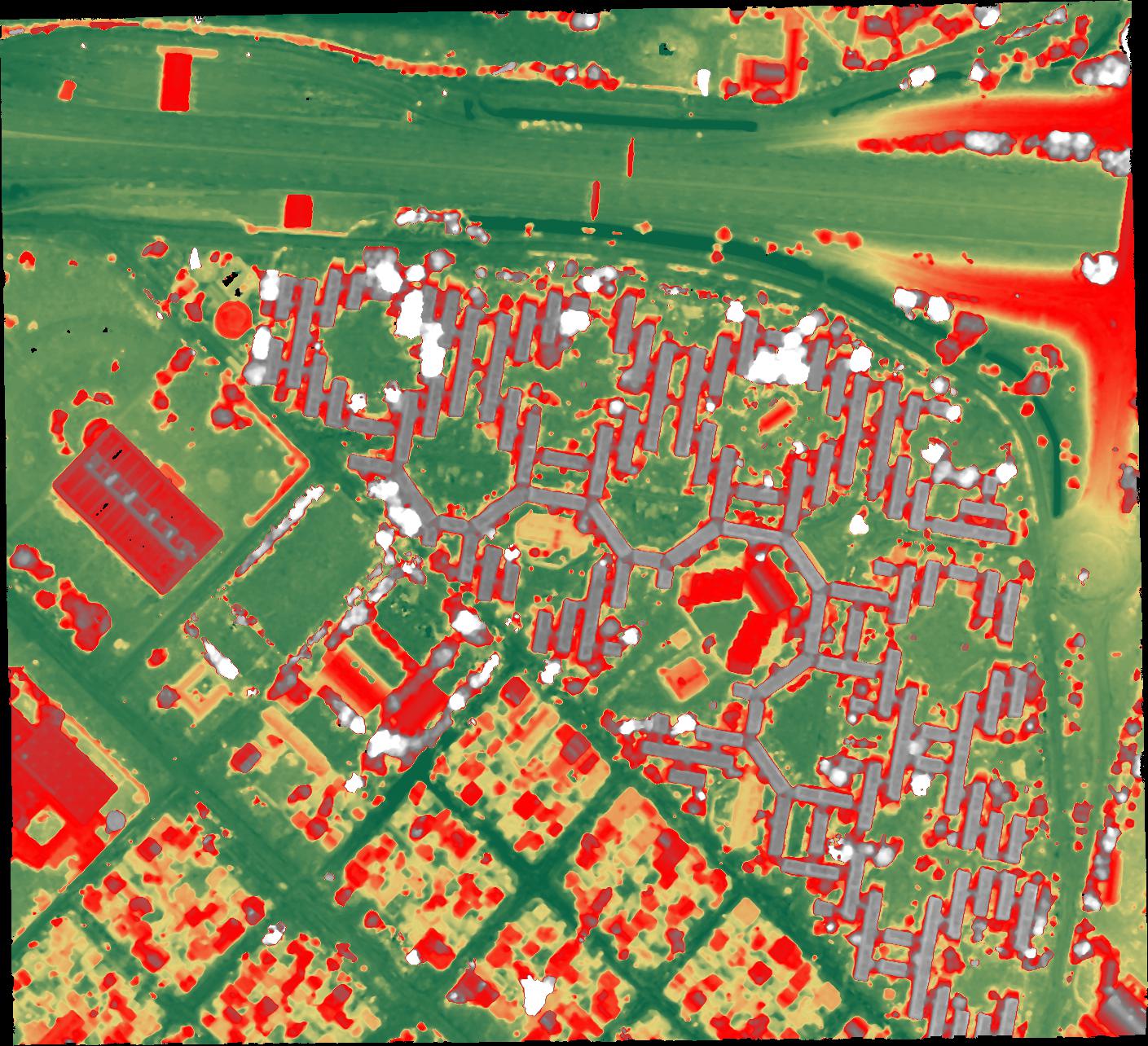

Gallery

Jacksonville, Florida, USA (37 images)

Acknowledgements

The research is based upon work supported by the Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA), via DOI/IBC Contract Number D17PC00287. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the ODNI, IARPA, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon.